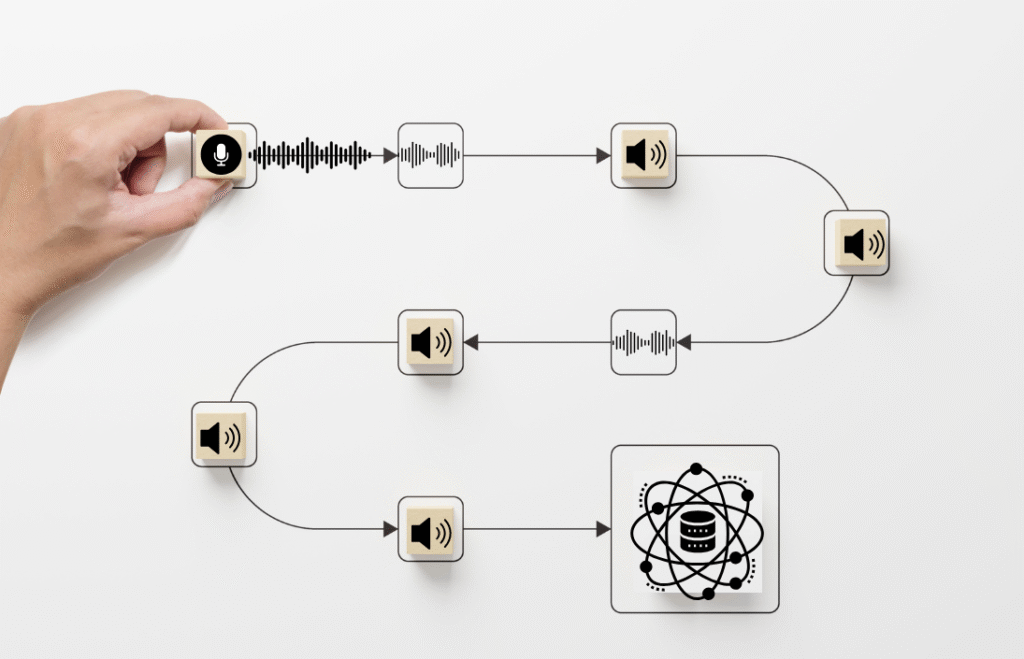

Our daily data generation system runs as a production pipeline built for consistent, high-volume output. We maintain active relationships with speaker networks across multiple regions, so new audio is collected every day and fed directly into our processing systems. Automated workflow management routes newly collected audio straight into our quality control pipeline, where cleaning, transcription, and validation run concurrently. As a result, new market-ready datasets are available every day, giving AI companies the steady data supply they need for ongoing model training and improvement.

Human quality assurance is built into every stage of daily production, so speed does not come at the expense of dataset quality. Our experienced team manages each day’s production batch and coordinates quality protocols from initial audio cleaning through final validation checks. Dedicated coordinators schedule speaker availability, oversee transcription workflows, and run quality review cycles to keep output steady and free of bottlenecks. Because every dataset passes through expert human review before delivery, clients get predictable access to fresh training data that meets our premium standards.